In my previous post I wondered why Kirschner, Sweller & Clark based their objections to minimal guidance in education on Atkinson & Shiffrin’s 1968 model of memory; it’s a model that assumes a mechanism for memory that’s now considerably out of date. A key factor in Kirschner, Sweller & Clark’s advocacy of direct instructional guidance is the limited capacity of working memory, and that’s what I want to look at in this post.

Other models are available

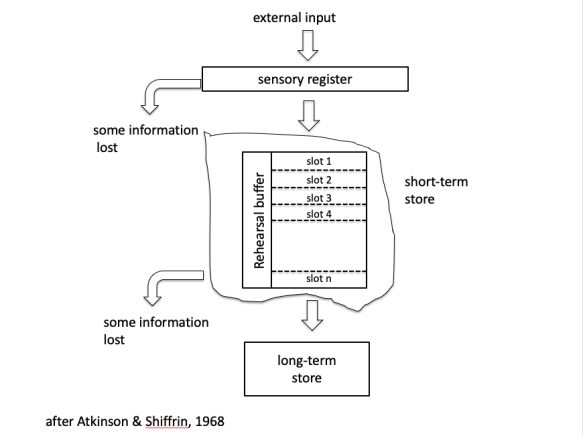

Atkinson & Shiffrin describe working memory as a ‘short-term store’. It has a limited capacity (around 4-9 items of information) and can retain that information for only a few seconds. It’s also a ‘buffer’; unless information in the short-term store is actively maintained, by rehearsal for example, it will be displaced by incoming information. Kirschner, Sweller & Clark note that ‘two well-known characteristics’ of working memory are its limited duration and capacity when ‘processing novel information’ (p.77), suggesting that their model of working memory is very similar to Atkinson & Shiffrin’s short-term store.
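The buffer behaviour described above can be sketched as a toy model in Python. This is only an illustration, not Atkinson & Shiffrin’s own formalism: the capacity of four, the example items and the `present` and `rehearse` helpers are all assumptions made for the sketch, and always displacing the oldest item first is a simplification.

```python
from collections import deque

CAPACITY = 4  # assumed capacity, chosen only for illustration


def present(store: deque, item: str) -> None:
    """A new item arrives; a full store silently drops its oldest item."""
    store.append(item)


def rehearse(store: deque, item: str) -> None:
    """Rehearsing an item already in the store makes it the newest again."""
    if item in store:
        store.remove(item)
        store.append(item)


store = deque(maxlen=CAPACITY)
for item in ["cat", "7", "blue", "Tuesday"]:
    present(store, item)

rehearse(store, "cat")   # "cat" is actively maintained
present(store, "apple")  # displaces "7", now the oldest item
present(store, "42")     # displaces "blue"

print(list(store))  # ['Tuesday', 'cat', 'apple', '42']
```

In this sketch the rehearsed item survives two new arrivals, while items presented after it but never rehearsed are displaced.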

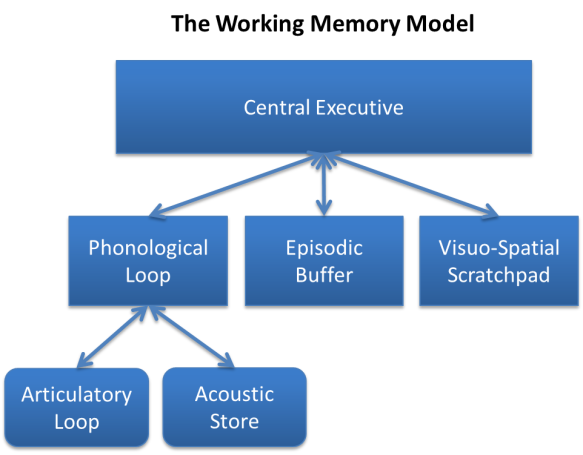

In 1974 Alan Baddeley and Graham Hitch proposed a more sophisticated model for working memory that included dedicated auditory and visual information processing components. Their model has been revised in the light of more recent discoveries relating to the function of the prefrontal areas of the brain – the location of ‘working memory’. The Baddeley and Hitch model (below) now looks a bit more complex than Atkinson & Shiffrin’s.

You could argue that it doesn’t matter how complex working memory is, or how the prefrontal areas of the brain work; neither alters the fact that the capacity of working memory is limited. Kirschner, Sweller & Clark question the effectiveness of educational methods involving minimal guidance because they increase cognitive load beyond the capacity of working memory. But Kirschner, Sweller & Clark’s model of working memory appears to be oversimplified and doesn’t take into account the biological mechanisms involved in learning.

Biological mechanisms involved in learning

Making connections

Learning is about associating one thing with another, and making associations is what the human brain does for a living. Associations are represented in the brain by connections formed between neurons; the ‘information’ is carried in the pattern of connections. A particular stimulus will trigger a series of electrical impulses through a particular network of connected neurons. So, if I spot my cat in the garden, that sight will trigger a series of electrical impulses that activates a particular network of neurons; the connections between the neurons represent all the information I’ve ever acquired about my cat. If I see my neighbour’s cat, much of the same neural pathway will be triggered because both cats are cats; it will then diverge slightly because I have acquired different information about each cat.

Novelty value

Neurons make connections with other neurons via synapses. Our current understanding of the role of synapses in information storage and retrieval suggests that new information triggers the formation of new synapses between neurons. If the same associations are encountered repeatedly, the relevant synapses are used repeatedly and those connections between neurons are strengthened, but if synapses aren’t active for a while, they are ‘pruned’. Toddlers form huge numbers of new synapses, but from the age of three through to adulthood, the number reduces dramatically as pruning takes place. It’s not clear whether synapse formation and pruning are pre-determined developmental phases or whether they happen in response to the kind of information that the brain is processing. Toddlers are exposed to vast amounts of novel information, but novelty rapidly tails off as they get older. Older adults tend to encounter very little novel information, often complaining that they’ve ‘seen it all before’.

The way working memory works

Most of the associations made by the brain occur in the cortex, the outer layer of the brain. Sensory information processed in specialised areas of cortex is ‘chunked’ into coherent wholes – what we call ‘perception’. Perceptual information is further chunked in the frontal areas of the brain to form an integrated picture of what’s going on around and within us. The picture that’s emerging from studies of prefrontal cortex is that this area receives, attends to, evaluates and responds to information from many other areas of the brain. It can do this because patterns of electrical activity from other brain areas are maintained in prefrontal areas for a short time whilst evaluation takes place. As Antonio Damasio points out in Descartes’ Error, the evaluation isn’t always an active, or even a conscious, process; there’s no little homunculus sitting at the front of the brain figuring out what information should take priority. What does happen is that streams of incoming information compete for attention. What gets attention depends on what information is coming in at any one time. If something happens that makes you angry during a maths lesson, you’re more likely to pay attention to that than to solving equations. During an exam, you might be concentrating so hard that you are unaware of anything happening around you.

The information coming into prefrontal cortex varies considerably. There’s a constant inflow from three main sources:

• real-time information from the environment via the sense organs;

• information about the physiological state of the body, including emotional responses to incoming information;

• information from the neural pathways formed by previous experience and activated by that sensory and physiological input (Kirschner, Sweller & Clark would call this long-term memory).

Working memory and long-term memory

‘Information’ and models of information processing are abstract concepts. You can’t pick them up or weigh them, so it’s tempting to think of information processing in the brain as an abstract process, involving rather abstract forces like electrical impulses. It would be easy to form the impression from Kirschner, Sweller & Clark’s model that well-paced, bite-sized chunks of novel information will flow smoothly from working memory to long-term memory, like water between two tanks. But the human brain is a biological organ, and it retains and accesses information using some very biological processes. Developing new synapses involves physical changes to the structure of neurons, and those changes take time, resources and energy. I’ll return to that point later, but first I want to focus on something that Kirschner, Sweller & Clark say about the relationship between working memory and long-term memory that struck me as a bit odd:

“The limitations of working memory only apply to new, yet to be learned information that has not been stored in long-term memory. New information such as new combinations of numbers or letters can only be stored for brief periods with severe limitations on the amount of such information that can be dealt with. In contrast, when dealing with previously learned information stored in long-term memory, these limitations disappear.” (p.77)

This statement is odd because it doesn’t tally with Atkinson & Shiffrin’s concept of the short-term store, and it runs counter to decades of experimental work showing that capacity limitations apply to all information in working memory, regardless of its source. But Kirschner, Sweller & Clark go on to qualify their claim:

“In the sense that information can be brought back from long-term memory to working memory over indefinite periods of time, the temporal limits of working memory become irrelevant.” (p.77)

I think I can see what they’re getting at; because information is stored permanently in long-term memory it doesn’t rapidly fade away and you can access it any time you need to. But you have to access it via working memory, so it’s still subject to working memory constraints. I think the authors are referring implicitly to two ways in which the brain organises information, both of which increase the capacity of working memory – chunking and schemata.

Chunking

If the brain frequently encounters small items of information that are usually associated with each other, it eventually ‘chunks’ them together and then processes them automatically as single units. George Miller, who in the 1950s did some pioneering research into working memory capacity, noted that people familiar with the binary notation then in widespread use by computer programmers didn’t memorise random lists of 1s and 0s as random lists, but as numbers in the decimal system. So 10 would be remembered as 2, 100 as 4, 101 as 5 and so on. In this way, very long strings of 1s and 0s could be held in working memory in the form of decimal numbers that would automatically be translated back into 1s and 0s when the people taking part in the experiments were asked to recall the list. Morse code experts do the same; they don’t read messages as a series of dots and dashes, but chunk up the patterns of dots and dashes into letters and then into words. Exactly the same process occurs in reading, but we don’t call it chunking; we call it learning to read. Chunking effectively increases the capacity of working memory – but it doesn’t increase it by very much. Curiously, although Kirschner, Sweller & Clark refer to a paper by Egan and Schwartz that’s explicitly about chunking, they don’t mention chunking as such.
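The recoding of 1s and 0s described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reconstruction of any experimental procedure: it assumes the digits are grouped in threes and each group is read as a binary number, and the function names `recode` and `decode` are made up for the example.

```python
def recode(bits: str, group_size: int = 3) -> list[int]:
    """Chunk a string of 1s and 0s into one number per group of digits."""
    if len(bits) % group_size != 0:
        raise ValueError("length must be a multiple of group_size")
    return [
        int(bits[i:i + group_size], 2)  # read each group as a binary number
        for i in range(0, len(bits), group_size)
    ]


def decode(chunks: list[int], group_size: int = 3) -> str:
    """Translate the remembered numbers back into 1s and 0s at recall."""
    return "".join(format(chunk, f"0{group_size}b") for chunk in chunks)


bits = "101000100111001110"
chunks = recode(bits)

print(len(bits), "digits ->", len(chunks), "chunks:", chunks)
# 18 digits -> 6 chunks: [5, 0, 4, 7, 1, 6]

assert decode(chunks) == bits  # nothing is lost in the recoding
```

Eighteen separate digits would overwhelm most people’s working memory, but six numbers sit comfortably within its limits, and the original string can still be recovered exactly.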

Schemata

What they do mention is the concept of the schema, particularly the schemata of chess players. In the 1940s Adriaan de Groot discovered that expert chess players memorise a vast number of configurations of chess pieces on a board; he called each particular configuration a schema. I get the impression that Kirschner, Sweller & Clark see schemata and chunking as synonymous, even though a schema usually refers to a meta-level way of organising information, like a life-script or an overview, rather than an automatic processing of several bits of information as one unit. It’s quite possible that expert chess players do automatically read each configuration of chess pieces as one unit, but de Groot didn’t call it ‘chunking’ because his research was carried out a decade before George Miller coined the term.

Thinking about everything at once

Whether you call them chunks or schemata, what’s clear is that the brain has ways of increasing the amount of information held in working memory. Expert chess players aren’t limited to thinking about the four or five possible moves for one piece, but can think about four or five possible configurations for all pieces. But it doesn’t follow that the limitations of working memory in relation to long-term memory disappear as a result.

I mentioned in my previous post what information is made accessible via my neural networks if I see an apple. If I free-associate, I think of apples – apple trees – should we cover our apple trees if it’s wet and windy after they blossom? – will there be any bees to pollinate them? – bee viruses – viruses in ancient bodies found in melted permafrost – bodies of climbers found in melted glaciers, and so on. Because my neural connections represent multiple associations I can indeed access vast amounts of information stored in my brain. But I don’t access it all simultaneously. That’s just as well, because if I could access all that information at once my attempts to decide what to do with our remaining windfall apples would be thwarted by totally irrelevant thoughts about mountain rescue teams and St Bernard dogs. In short, if information stored in long-term memory weren’t subject to the capacity constraints of working memory, we’d never get anything done.

Chess masters (or ornithologists or brain surgeons) have access to vast amounts of information, but in any given situation they don’t need to access it all at once. In fact, accessing it all at once would be disastrous because it would take forever to eliminate information they didn’t need. At any point in any chess game, only a few configurations of pieces are possible, and that number is unlikely to exceed the capacity of working memory. Similarly, even if an ornithologist/brain surgeon can recognise thousands of species of birds/types of brain injury, in any given environment, most of those species/injuries are likely to be irrelevant, so don’t even need to be considered. There’s a good reason why working memory’s capacity is limited, and why all the information we process is subject to that limit.

In the next post, I want to look at how the limits of working memory impact on learning.

References

Atkinson, R, & Shiffrin, R (1968). Human memory: A proposed system and its control processes. In K. Spence & J. Spence (Eds.), The psychology of learning and motivation (Vol. 2, pp. 89–195). New York: Academic Press.

Damasio, A (1994). Descartes’ Error. Vintage Books.

Kirschner, PA, Sweller, J & Clark, RE (2006). Why Minimal Guidance During Instruction Does Not Work: An Analysis of the Failure of Constructivist, Discovery, Problem-Based, Experiential, and Inquiry-Based Teaching. Educational Psychologist, 41, 75–86.
